Hello. I have a Hi-Link FM225 face recognition module running firmware version FM225_V0.a8_3B.

When I send the VERIFY (0x12) or ENROLL (0x13) command, I then receive MID_NOTE (1) packets with note ID NID_FACE_STATE (1), which contain an s_note_data_face structure. The first five fields of the structure are clear to me. My problem is with the pitch, yaw and roll fields: what units of measurement do they use?

For example, when I look straight into the camera I get:

| Pitch | Yaw | Roll |
|------:|----:|-----:|
| 146 | 59 | -17 |
| 146 | 59 | -16 |
| 152 | 60 | -12 |
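None of the "looking straight" readings are near zero, so one hedged way to use the data without knowing the exact unit is to record a neutral pose per user and work with offsets from it. This sketch assumes the values are degrees that wrap at ±180 (unconfirmed); it uses a circular mean so that samples on either side of ±180 average correctly. Both function names are hypothetical.

```python
import math
from typing import Iterable


def circular_mean_deg(samples: Iterable[float]) -> float:
    """Circular mean of angles in degrees, result in (-180, 180]."""
    s = c = 0.0
    for a in samples:
        s += math.sin(math.radians(a))
        c += math.cos(math.radians(a))
    return math.degrees(math.atan2(s, c))


def angle_offset_deg(value: float, reference: float) -> float:
    """Signed difference value - reference, wrapped into [-180, 180)."""
    return (value - reference + 180.0) % 360.0 - 180.0
```

With the neutral pitch samples 146, 146 and 152 the reference comes out at about 148, and `angle_offset_deg(-171, 176)` gives 13 rather than -347, which matters because several readings cross ±180.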

When I turn my head from left to right (changing yaw), I get:

| Pitch | Yaw | Roll |
|------:|----:|-----:|
| 176 | 71 | 14 |
| 174 | 71 | 12 |
| -171 | 69 | 23 |
| 177 | 70 | 14 |
| 179 | 70 | 14 |
| -171 | 69 | 23 |
| -172 | 69 | 23 |
| -170 | 68 | 26 |
| -176 | 68 | 20 |
| -178 | 66 | 19 |
| 173 | 65 | 11 |
| 177 | 66 | 15 |
| 175 | 66 | 12 |
| 179 | 66 | 14 |
| 179 | 66 | 16 |
| 174 | 65 | 8 |
| 171 | 64 | 3 |
| 173 | 65 | 7 |
| 163 | 62 | -4 |
| 163 | 60 | -8 |
| 150 | 57 | -19 |
| 159 | 59 | -12 |
| 157 | 58 | -14 |
| 148 | 53 | -25 |
| 151 | 55 | -19 |
| 150 | 51 | -24 |
| 144 | 49 | -26 |
| 142 | 47 | -28 |
| 143 | 46 | -28 |
| 136 | 42 | -29 |
| 134 | 41 | -23 |
| 133 | 39 | -28 |
| 128 | 35 | -28 |
| 126 | 32 | -21 |
| 123 | 31 | -22 |
| 124 | 30 | -21 |
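In this left-to-right run the pitch value jumps between about 179 and -171 from one sample to the next. If the field is an angle in degrees that wraps at ±180 (a guess, since the unit is exactly what is in question), unwrapping it gives a continuous trace before plotting or thresholding. `unwrap_degrees` is a hypothetical helper written for illustration; `numpy.unwrap` with `period=360` does the same for arrays.

```python
from typing import Iterable, List


def unwrap_degrees(samples: Iterable[float], period: float = 360.0) -> List[float]:
    """Remove jumps larger than half a period by adding multiples of period."""
    out: List[float] = []
    offset = 0.0
    prev = None
    for s in samples:
        if prev is not None:
            delta = s - prev
            if delta > period / 2:     # e.g. -171 -> 177: wrapped downward
                offset -= period
            elif delta < -period / 2:  # e.g. 174 -> -171: wrapped upward
                offset += period
        out.append(s + offset)
        prev = s
    return out
```

Applied to the first six pitch readings above (176, 174, -171, 177, 179, -171) it yields 176, 174, 189, 177, 179, 189, so the apparent 345-degree swings become swings of about 10 to 15.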

When I nod my head (changing pitch), I get:

| Pitch | Yaw | Roll |
|------:|----:|-----:|
| 133 | 55 | -29 |
| 127 | 52 | -34 |
| 120 | 48 | -37 |
| 122 | 50 | -36 |
| 114 | 43 | -42 |
| 112 | 41 | -44 |
| 112 | 41 | -44 |
| 104 | 33 | -48 |
| 106 | 36 | -47 |
| 100 | 27 | -50 |
| 99 | 25 | -50 |
| 98 | 24 | -50 |
| 99 | 27 | -51 |
| 100 | 28 | -51 |
| 96 | 22 | -54 |
| 99 | 26 | -48 |

When I tilt my head (changing roll), I get:

| Pitch | Yaw | Roll |
|------:|----:|-----:|
| 132 | 55 | 0 |
| 131 | 55 | -8 |
| 131 | 56 | -12 |
| 140 | 59 | -12 |
| 131 | 56 | -22 |
| 130 | 55 | -28 |
| 135 | 58 | -32 |
| 128 | 54 | -45 |
| 133 | 56 | -46 |
| 141 | 62 | -47 |

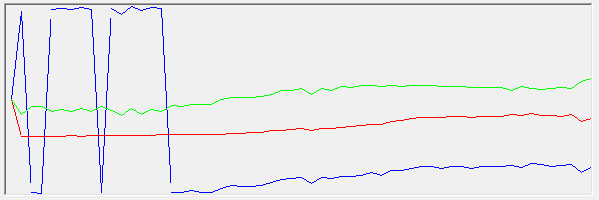

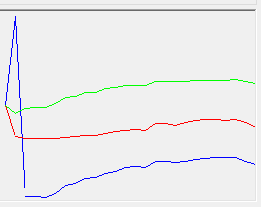

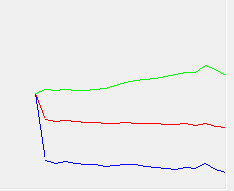

I made the graphs with data (blue - pitch, red - yaw, green - roll).

When turn head from left to right:

When nod the head (from up to down):

When tilt the head:

How should I interpret this data? I want to give the user voice prompts during the enrollment or verification process.
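For the voice prompts, one possible approach (an assumption, not something the module documentation is known to describe) is to compare each field's offset from the user's neutral pose against a tolerance and speak a hint for the field that is furthest off. Which head motion each field actually tracks, and which sign means which direction, still has to be established from measurements like the ones above, so the prompt table, the 10-degree tolerance and `pose_tip` itself are all hypothetical.

```python
from typing import Dict, Optional, Tuple


def pose_tip(
    offsets: Dict[str, float],
    prompts: Dict[Tuple[str, int], str],
    tolerance: float = 10.0,  # HYPOTHETICAL threshold, tune experimentally
) -> Optional[str]:
    """Return the prompt for the field that deviates most beyond tolerance.

    offsets: non-empty map of field name -> signed offset from neutral pose
    prompts: (field name, +1 or -1) -> text to speak for that direction
    Returns None when every field is within tolerance.
    """
    axis, off = max(offsets.items(), key=lambda kv: abs(kv[1]))
    if abs(off) <= tolerance:
        return None
    return prompts.get((axis, 1 if off > 0 else -1))


# HYPOTHETICAL mapping: the sign-to-direction pairing is a placeholder
# and must be verified against real readings from the module.
EXAMPLE_PROMPTS = {
    ("yaw", 1): "Please turn your head a little to the left.",
    ("yaw", -1): "Please turn your head a little to the right.",
    ("pitch", 1): "Please lower your chin a little.",
    ("pitch", -1): "Please raise your chin a little.",
    ("roll", 1): "Please keep your head level.",
    ("roll", -1): "Please keep your head level.",
}
```

Picking only the single worst field keeps the prompts short; speaking several corrections at once tends to be harder for a user to follow.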

Thanks in advance!